At ITAW, we have started to use computer vision and artificial intelligence to support our goal of elucidating the biology and ecology of wild animals.

Thanks to the development and popularization of technologies such as camera traps, video-capable tags, and acoustic sensors, ecologists have gained access to immense amounts of data in the form of images, videos, and recordings. Data collected through these methods are difficult to analyze manually because they are collected in the wild under highly variable conditions, which makes it challenging to develop protocols that work in every case.

A second aspect that makes the analysis difficult is the sheer scale of the data. A single camera trap can take thousands of pictures in a couple of days, while video-capable tags can record gigabytes of video before they detach from the animal to be recovered. Going through such data by hand requires a lot of time and effort from researchers; furthermore, a large share of this effort goes into merely separating the useful images, videos, or recordings from the empty ones. For example, camera traps tend to be motion-activated, which can result in many empty images when the camera is close to moving grass or a body of water.

Computer vision techniques and artificial intelligence can help researchers by leaving the work of sifting through the data to computers.

Computer vision

Computer vision refers to the set of techniques and algorithms that enable computers to capture and interpret visual information. At ITAW we are currently using computer vision as part of our seal research.

Computer Vision for seal video tags

As part of its efforts to expand the knowledge on the ecology of harbor seals in the North Sea, the ITAW team has been deploying camera tags on wild animals. The goal of this effort is to record predation events in order to understand different aspects of the seals' foraging ecology. These observations will also help to validate the data obtained through tags without video capability, because with such tags predation events are inferred from proxy measures such as depth and changes in speed and direction.

To help sift through the thousands of videos being recorded, we developed a computer vision pipeline that summarizes relevant information about the video files. This includes the dominant color per frame, which allows us to eliminate videos with a high proportion of black or very dark frames. We also extract features of each frame, such as the entropy or the between-frame difference. This allows us to detect parts of the video with strong changes between frames, helping our researchers find possible predation events without having to go through all the videos.

Example of the use of entropy changes between frames to detect parts of the videos with strong movement. The peaks indicate parts of the video where there are strong changes between frames, which can be used to detect predation events.
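The per-frame summary described above can be illustrated with a minimal sketch. It is not the ITAW pipeline itself: to stay dependency-free, each frame is assumed to be a flat list of 8-bit grayscale values, mean brightness stands in for the dominant color, and the function names, the brightness threshold, and the peak rule (a value above 1.5 times the average) are all hypothetical choices made for illustration.

```python
import math
from collections import Counter


def shannon_entropy(frame):
    """Shannon entropy (in bits) of the pixel-value histogram of one frame."""
    n = len(frame)
    probs = [count / n for count in Counter(frame).values()]
    return sum(-p * math.log2(p) for p in probs)


def mean_abs_difference(prev, curr):
    """Average absolute pixel change between two frames of equal size."""
    return sum(abs(a - b) for a, b in zip(prev, curr)) / len(curr)


def is_dark(frame, threshold=30):
    """Assumed rule: a frame is 'dark' if its mean brightness is below threshold."""
    return sum(frame) / len(frame) < threshold


def summarize(frames, dark_threshold=30):
    """Return one summary row (dict) per frame."""
    rows = []
    prev = None
    for index, frame in enumerate(frames):
        rows.append({
            "frame": index,
            "dark": is_dark(frame, dark_threshold),
            "entropy": shannon_entropy(frame),
            # The first frame has no predecessor, so its difference is 0.
            "diff": 0.0 if prev is None else mean_abs_difference(prev, frame),
        })
        prev = frame
    return rows


def flag_peaks(rows, key="diff", factor=1.5):
    """Indices of frames whose value for `key` exceeds factor * the average."""
    values = [row[key] for row in rows]
    mean = sum(values) / len(values)
    return [row["frame"] for row in rows if row[key] > factor * mean]


# Tiny synthetic "video": two black frames, one textured frame, one black frame.
black = [0] * 16
textured = list(range(0, 256, 16))
rows = summarize([black, black, textured, black])
peaks = flag_peaks(rows)
```

In this toy example the textured frame and the frame after it are flagged, because both differ strongly from their predecessor. On real footage the frames would typically be read with a video library, and the thresholds would need tuning.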

Artificial intelligence

The example shown above relies on image analysis techniques without artificial intelligence. Nowadays, the term artificial intelligence commonly refers to the use of deep learning models to help analyze complex data. To achieve this, the models need to be trained on correctly labeled data, which usually requires large amounts of data and computing power.

On each iteration of the training process, the weights of the model are updated in order to reduce the difference between the observed and predicted results. Deep learning models are composed of many nodes whose connections carry weights, often millions of them. Densely connected sequences of these nodes allow the network to learn to detect very complicated patterns. The characteristics of these nodes and the way in which they are connected are known as the model “architecture”, and can have a huge impact on the model's performance and the tasks that it can perform.
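The weight update described above can be sketched with the smallest possible model: a single weight and a bias fitted by gradient descent on the mean squared error. This is only an illustration of the principle; deep learning models apply the same idea to millions of weights. The function name, learning rate, and number of iterations are arbitrary choices for this example.

```python
def train_linear(xs, ys, lr=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Difference between predicted and observed values.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Move each parameter a small step against its gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Data generated from y = 2x + 1; training should recover w ≈ 2 and b ≈ 1.
w, b = train_linear([0, 1, 2, 3], [1, 3, 5, 7])
```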

At ITAW we are currently using artificial intelligence models in three of our projects: seal detection in aerial photos, otter detection in camera trap images, and passive acoustic monitoring of North Sea cetaceans.

Training YOLOv8 and RetinaNet to identify seals in aerial pictures

As part of its ongoing efforts to monitor the harbor seal and gray seal populations in the North Sea and the Elbe, the team at ITAW performs routine seal counts using aerial surveys. The process involves taking pictures of the seals, which are then sorted and manually counted. To assist researchers with the counting, we are using two well-established model architectures: YOLOv8 and RetinaNet. These computer vision models are open-source, allowing researchers to use and retrain them to fit their specific needs. YOLO (You Only Look Once) is known for its real-time object detection capabilities and fast inference times, while RetinaNet is recognized for its accuracy, particularly in scenarios with small objects or imbalanced data. We are currently training the models from scratch to differentiate pictures with seals from those without seals. Eventually, we aim for the models to assist with the counting process as well. However, this task is more complex because it also requires identifying the species.
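RetinaNet's robustness to imbalanced data is usually attributed to the focal loss it was introduced with, which down-weights examples the model already classifies well (for instance, the many background regions without seals). The sketch below shows the per-example formula for a binary case; it is a stand-alone illustration, not code taken from any RetinaNet implementation, and the values `gamma=2.0` and `alpha=0.25` are the commonly cited defaults rather than settings used at ITAW.

```python
import math


def cross_entropy(p, y):
    """Standard binary cross-entropy for predicted probability p and label y."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(p_t)


def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Focal loss: cross-entropy scaled by alpha_t * (1 - p_t) ** gamma."""
    p_t = p if y == 1 else 1.0 - p              # probability given to the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


# A confident, correct prediction is down-weighted far more than a wrong one.
easy_ratio = focal_loss(0.9, 1) / cross_entropy(0.9, 1)
hard_ratio = focal_loss(0.1, 1) / cross_entropy(0.1, 1)
```

With `gamma=0` the modulating factor disappears and the focal loss reduces to cross-entropy weighted by `alpha_t`.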

Using Megadetector to assist otter research

Artificial intelligence models have the additional benefit of being modular and reusable. Once a model has been trained, its weights can be made available to the public, and anyone can run the model on their own data. Megadetector is a YOLOv5 model trained on millions of camera trap pictures of wildlife from around the world. At present, we use Megadetector's default weights to quickly screen camera trap images for animal presence. These images are then inspected by a team of specialists to find our target species, the European otter. The current benefit of using Megadetector is its speed, which allows us to go through millions of pictures in a matter of hours. We are also considering fine-tuning the model. Fine-tuning involves adjusting the parameters of a pre-trained model to better suit a specific task or dataset. This process would allow us to improve Megadetector's accuracy for our own wildlife monitoring and thereby support conservation efforts.

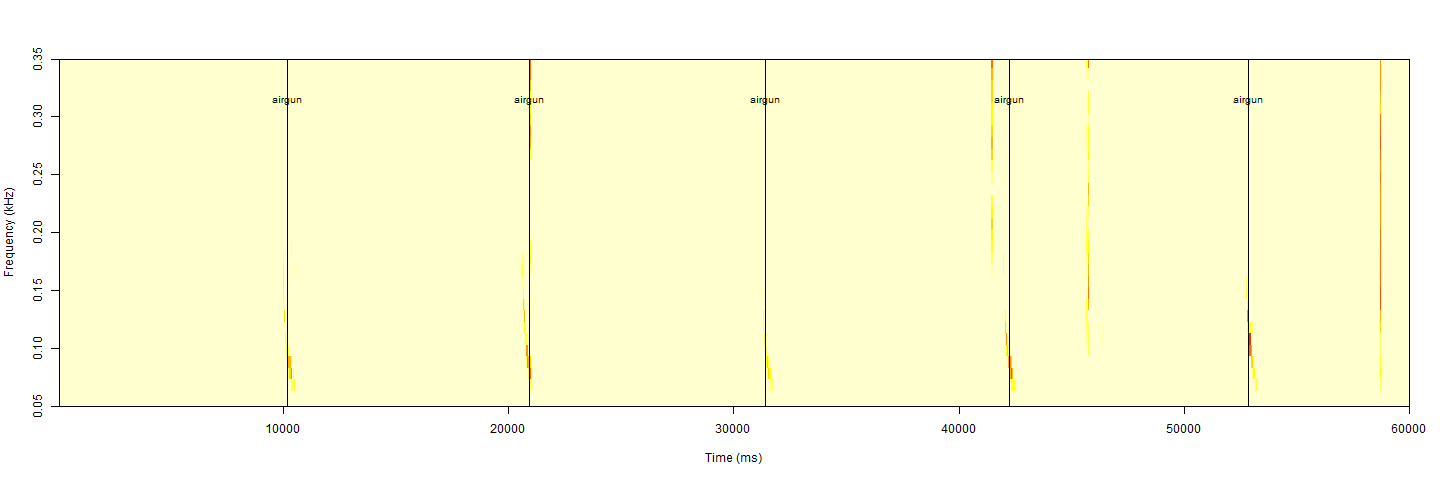
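The screening step described in this section, keeping only images in which an animal was detected, can be sketched as a filter over the detector's output. The sketch assumes results in the JSON layout commonly produced by Megadetector batch runs (an `images` list with per-image `detections`, each holding a `category` id and a `conf` score, plus a `detection_categories` lookup). The field names should be checked against the version in use, and the file names, scores, and the `min_conf` threshold here are made up for illustration.

```python
def images_with_animals(results, min_conf=0.2):
    """Return the files that have at least one 'animal' detection above min_conf."""
    # Look up which category ids mean "animal" instead of hard-coding them.
    animal_ids = {
        cat_id
        for cat_id, name in results["detection_categories"].items()
        if name == "animal"
    }
    keep = []
    for image in results["images"]:
        # Images that failed to process may have no detections entry.
        detections = image.get("detections") or []
        if any(
            d["category"] in animal_ids and d["conf"] >= min_conf
            for d in detections
        ):
            keep.append(image["file"])
    return keep


# Hypothetical results for three images (already parsed from JSON).
example_results = {
    "detection_categories": {"1": "animal", "2": "person", "3": "vehicle"},
    "images": [
        {"file": "cam01/img_0001.jpg",
         "detections": [{"category": "1", "conf": 0.91, "bbox": [0.1, 0.2, 0.3, 0.4]}]},
        {"file": "cam01/img_0002.jpg", "detections": []},
        {"file": "cam01/img_0003.jpg",
         "detections": [{"category": "2", "conf": 0.88, "bbox": [0.5, 0.5, 0.2, 0.3]},
                        {"category": "1", "conf": 0.05, "bbox": [0.0, 0.0, 0.1, 0.1]}]},
    ],
}
to_review = images_with_animals(example_results)
```

Only the first image would be passed on to the specialists here: the second is empty, and the third contains a person and an animal detection below the confidence threshold.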

R-package ‘soundClass’ and minke detector to detect potential minke whale vocalizations

AI is also used for passive acoustic monitoring (PAM), which makes it possible to record data from the marine environment over long periods of time. Advances in data acquisition, storage, battery capacity, and processing techniques make machine learning a practical necessity for detecting acoustic signals of interest. Within the framework of the project HABITATWal, an acoustic survey is being conducted to record minke whale vocalizations at the Dogger Bank in the German North Sea. The huge amount of data obtained from long-term recordings means that listening to and screening the sound files takes an excessive amount of time, so we apply AI tools to overcome that issue. Recently, a new package for the R software environment, ‘soundClass’, was developed to address this challenge. soundClass is based on convolutional neural networks (CNNs), allowing for robust detection and classification of marine sound sources, such as minke whale vocalizations or anthropogenic events.